Each Instance of "AI Utility" Stems from Some Human Act(s) of Information Recording and Ranking

It's ranking information all the way down.

In my previous post, I wrote about the “attentional agency” framework and argued that in many contexts, it is useful to view search engines, content platforms, and generative AI as all trying to address the same “giant ranking task”. They’re all just ranking bundles or chunks of information. This framing also happens to be useful for a related point I like to make: the idea that “every time ‘AI’ provides utility to somebody, at least some of the utility stems from some prior human act of information recording” (which is just another way of restating one of the core points in a wide body of work on data labour, data leverage, and data dignity).

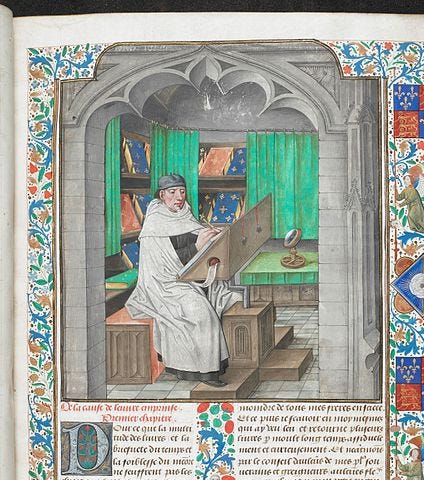

To restate this assertion, which is not really new: each act of AI utility (each time you use ChatGPT, or Google, or TikTok, and get something out of it) ultimately stems from some human act (or set of human acts) somewhere that involved processing, assessing, and recording information. Put simply (and, again, at the risk of stating the obvious), Wikipedia is useful because some other person spent time reading a book or other source and typing out the important information from that source.[1]

When you use Google to get to that Wikipedia article, the most upstream value creation came from that same act of “typing out important information”. Google’s employees provided you with additional value by creating a mapping between your query and the article. Note, too, that some of this mapping value came from Google users and their queries and clicks. There’s an interesting debate to be had about how to allocate credit and value among all these parties, but from a counterfactual perspective I think it’s clear that they all play a role, and that the original acts of writing, typing, and recording come “first” (at least chronologically).

If Google helps you find a link to the Apple App Store to download TikTok, and TikTok’s learning algorithm then sends you a video you really like, all the parties involved contributed to the chain of mappings that took you from intent to query to outcome. In this case, value is coming from the employees and users of Google, Apple, and TikTok, alongside the creator of the content you ultimately consume. Sometimes the intermediate mapping steps work because of carefully hand-selected “signals” (e.g. bespoke quality classifiers), and sometimes they rely on large-scale pattern recognition (e.g. just train a giant user-item collaborative filtering model).

Every helpful interaction with “AI” (at least every one that involves consuming information; more on “agents” in a bit) can be viewed as reaping the harvest of cascading attempts to rank information. This framing highlights the challenge of creating a sustainable economic model: why did the people who created the original records do what they did? Was it altruism, or money? Are they going to keep doing it? It also suggests a certain “grand mission”, and a corresponding sense of sacredness, inherent to the “AI” side of computing. AI is, sometimes, working in service of the eternal human quest to mark trails, create records of what’s poisonous, share stories, and more generally create shared meaning for mutual benefit.

The idea that so much of AI depends on human acts of ranking and recording certainly raises some concerns, and some questions.

On the negative side: What serious actions are we going to take to create sustainable economics that incentivize and maintain information-ranking practices and institutions, alongside the more “technical” aspects of data maintenance? Are we careening towards a future of surveillance and precarious data work? Can we still enable the “happy” version of peer-produced data, generated by happy, economically stable citizens of the world?

On the positive side: this framing provides some positive energy. Working on AI can bring about good because the field is making progress on a truly universal information organization task.

I think both the positive and negative aspects of this particular framing are still very much missing from current AI discourse and the “AI culture” more generally.

What about “AI Agents”? If we send out an “agent” to act in the world and create its own records, do the above claims become false? Generally, I think, the answer is no. Under current architectures, the general fact that any set of “AI” actions can be traced to some human records remains true. Depending on how the agent is actuated (Can it spin a motor? Can it “just” make API calls? Does a human click a button before the agent spins a motor up?), there might be more information involved overall, and the end goal might be acting directly on the world instead of delivering information to a user (who can then either act or not), but all the individual actions can still be traced to information records in the same way. (Note also the many moral and practical complications that arise when agents “act” in the world; it’s a big deal to hook up a model to a motor or a financial API.)

So, even when we send out AI agents into the world (or into constrained miniature worlds, like a digital instance of a chess match), the utility (or disutility) still stems from some human acts of information recording and ranking.

It might be true that in some contexts, “agents” and reinforcement learning require substantially fewer people overall than supervised learning, collaborative filtering, or LLM approaches. It might only take one person to write down the rules of chess in a formal programming language. In practice, agents might involve some combination of “one person writes down the environment rules”, some use of imitation learning, and some use of “experiential learning” (in which people in the world are conscripted into the learning process). Note also that if an agent uses an LLM for part of its decision-making (e.g. for reasoning), every person who contributed to that LLM’s training now becomes implicated in the creation of the records that agent obtains. So a robot that goes out in the world and acts is not “person-free”; in some cases, it could be massively collective (e.g. if the robot uses an LLM whose training data reflects millions of people and then interacts with hundreds of people in the present day).

In short, at this point in time I think the use of RL can certainly move us along the spectrum of collectiveness but does not break the fundamental dependency on human acts of recording.

Moving away from the specific question of “How do agents affect all this?” to high-level implications that follow from the fact that “it’s ranking information all the way down”:

AI companies (and AI-using companies) are fundamentally reliant on long-term sustainability of practices and platforms that incentivize and enable people to engage in information recording and ranking acts. This is a point I’ve said before, and will certainly say again!

It’s unlikely we’ll reach a good balance of power between actors that are fundamentally information-creating (e.g. a community of Wikipedia editors) and those that are fundamentally information-using (e.g. a community of people focused purely on model training) without both (a) truly public-interest bodies getting involved in the “information use” components and (b) infrastructure that supports serious collective bargaining around the use of information.

This is why I’m simultaneously an advocate for public AI and for interventions that support collective bargaining for information.

Is this just a tautology (a statement that’s necessarily true by construction)? Are there even counterexamples? For reinforcement learning, perhaps we could argue that this is not true: an “agent” (or, more specifically, the stored representation of a “policy”) is able to create utility (e.g., show you a good chess move) purely because it explored the state space on its own. Isn’t this a case of AI creating utility without a traceable human action? Perhaps. But there were still some human acts of recording information: somebody had to write down the rules of chess formally to create a state space to be explored. A stronger counterexample might be a fully sensor-integrated system that goes out into the world and reacts to it using fixed feedback mechanisms that were “programmed” in analog.